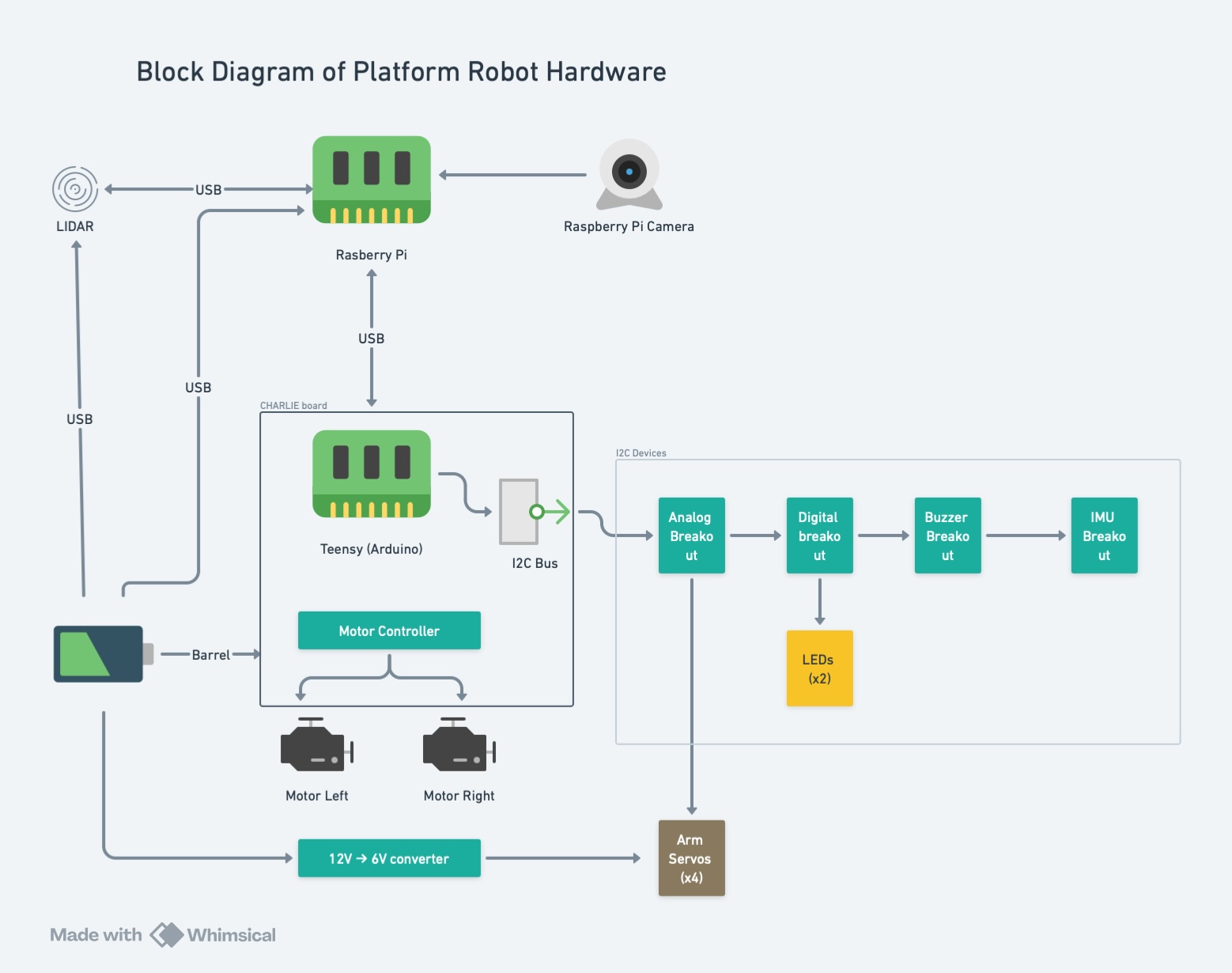

Robot Hardware

- Subsystems are ‘represented’ in the software

- Locomotion (e.g. wheels)

- Actuation (e.g. arms)

- Sensing (e.g. cameras, lidar, buttons, encoders)

- Computing (e.g. single-board computer SBC, microcontroller)

Locomotion

- Holonomic vs. non-holonomic

- Holonomic - can move in any direction at any instant

- Non-holonomic - motion is constrained, so it cannot move in every direction (e.g. not directly sideways)

- Degrees of freedom

- Ambiguity in defining DoF

2 wheeled vehicles

- Differential drive: Turtlebot3, Platform, Bullet, Branbot

- Turns by driving the wheels at different speeds

- Sometimes there is a caster

- Can move forward and backward, turn along an arc, or pivot in place; cannot move sideways

4 wheeled vehicles

- Cannot move up and down or (directly) sideways

- With more than 2 wheels, or tracks: skid steering

- Cars have “Ackermann” steering (4 wheels, 2 that can steer)

- 4WD with “Mecanum” wheels

Quadruped

- Gait - trot, gallop, etc

- Sensing vs. Non Sensing issues

Common case: Differential drive

Lower Level

- Subscribes to `cmd_vel`

- Desired translation and rotation speeds

- Inverse kinematics tells us the desired rotations per second for each wheel

- Publishes to `odom`

- Forward kinematics tells us estimated actual motion (position and speed)

- ‘Dead Reckoning’
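The two kinematics steps above can be sketched roughly as follows. This is a minimal illustration for a differential drive robot, not the code that runs on any particular robot: the constants `WHEEL_RADIUS` and `WHEEL_SEPARATION` and all function names are assumptions made up for the example.

```python
import math

# Illustrative geometry (assumed values, not measured from a specific robot).
WHEEL_RADIUS = 0.033      # metres
WHEEL_SEPARATION = 0.16   # metres between the two drive wheels


def inverse_kinematics(linear, angular):
    """Map a cmd_vel-style command (m/s, rad/s) to (left, right) wheel speeds in rad/s."""
    v_left = linear - angular * WHEEL_SEPARATION / 2.0
    v_right = linear + angular * WHEEL_SEPARATION / 2.0
    return v_left / WHEEL_RADIUS, v_right / WHEEL_RADIUS


def forward_kinematics(w_left, w_right):
    """Map measured wheel speeds (rad/s) back to body (linear, angular) speeds."""
    v_left = w_left * WHEEL_RADIUS
    v_right = w_right * WHEEL_RADIUS
    linear = (v_right + v_left) / 2.0
    angular = (v_right - v_left) / WHEEL_SEPARATION
    return linear, angular


def dead_reckon(pose, w_left, w_right, dt):
    """Integrate one time step of odometry; pose is (x, y, theta)."""
    x, y, theta = pose
    linear, angular = forward_kinematics(w_left, w_right)
    x += linear * math.cos(theta) * dt
    y += linear * math.sin(theta) * dt
    theta += angular * dt
    return x, y, theta
```

Because dead reckoning only integrates wheel motion, small errors (wheel slip, encoder resolution) accumulate over time, which is why the result is an estimate rather than the true position.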

Supporting hardware

- Two wheels + 1 or 2 casters

- Each wheel has motor and encoder

- Motor will go “faster” when given more current

- Encoder counts up as the wheel rotates
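As a rough sketch of how encoder counts become a wheel speed, assume the encoder reports a running tick count with a fixed number of ticks per wheel revolution. The resolution `TICKS_PER_REV` and the function name are hypothetical and chosen only for illustration.

```python
import math

TICKS_PER_REV = 1440  # assumed encoder resolution (ticks per wheel revolution)


def wheel_speed_from_ticks(prev_ticks, curr_ticks, dt):
    """Return wheel angular speed in rad/s from two tick readings taken dt seconds apart."""
    delta_ticks = curr_ticks - prev_ticks
    revolutions = delta_ticks / TICKS_PER_REV
    return revolutions * 2.0 * math.pi / dt
```

A negative result simply means the count went down, i.e. the wheel turned backward during the interval.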

Algorithm

- PID control to minimize delta between desired and actual

- Forward kinematics
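The PID step can be sketched as a textbook controller that takes the desired and actual wheel speed and returns a correction. This is only an illustration under simplifying assumptions (no output clamping, no integral windup protection); the class name and gains are placeholders that would need tuning on a real motor.

```python
class PID:
    """Textbook PID controller; the gains are placeholders that need tuning."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term until we have two samples

    def update(self, desired, actual, dt):
        """Return the control output for one time step of length dt seconds."""
        error = desired - actual
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Usage: one controller per wheel, called once per control cycle.
left_pid = PID(kp=2.0, ki=0.5, kd=0.0)
command = left_pid.update(desired=6.0, actual=5.2, dt=0.02)
```

The output would typically be mapped to a motor command (for example a PWM duty cycle), and the measured speed fed back in on the next cycle.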

Sensors

Lidar

- Rotates and uses a laser to measure distance to nearest obstacle

- Returns a vector of values corresponding to the directions

- Lidars can operate in 2D (scan) or 3D (point cloud); ours is 2D

Limitation: operates in a plane, like a disk. Can't detect an obstacle above or below the plane!
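To make the "vector of values corresponding to the directions" concrete, here is a small sketch that turns a 2D scan into points and finds the nearest obstacle. The parameter names `ranges`, `angle_min`, and `angle_increment` follow the fields of the ROS `LaserScan` message; the function names are made up for this example, and readings of `inf` or `nan` are assumed to mean "no return".

```python
import math


def scan_to_points(ranges, angle_min, angle_increment):
    """Convert a 2D scan (ranges in metres) to (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges):
        if math.isinf(r) or math.isnan(r):
            continue  # no return in this direction
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def nearest_obstacle(ranges, angle_min, angle_increment):
    """Return (distance, bearing in radians) of the closest valid reading, or None."""
    best = None
    for i, r in enumerate(ranges):
        if math.isinf(r) or math.isnan(r):
            continue
        if best is None or r < best[0]:
            best = (r, angle_min + i * angle_increment)
    return best
```

All points produced this way lie in the scan plane, which is exactly the limitation noted above: anything above or below that plane never appears in `ranges`.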

Visual Cameras

- Webcam sees a color picture

- Matrix of dots, each dot has a color: `Dot[x,y] = {r,g,b}`

- Data can be processed, for example, with OpenCV

- A lot of data and a lot of processing

- Bandwidth limits
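A quick back-of-the-envelope calculation shows why camera data strains bandwidth. The sketch below assumes uncompressed frames at 3 bytes per pixel; the resolution and frame rate are example values, not the settings of any particular camera, and the function name is hypothetical. Note that the nested list is indexed row first (`image[y][x]`), which is the usual in-memory layout.

```python
def raw_video_bandwidth(width, height, fps, bytes_per_pixel=3):
    """Bytes per second for uncompressed video at the given frame size and rate."""
    return width * height * bytes_per_pixel * fps


# A tiny 2x2 "image": image[y][x] is an (r, g, b) tuple.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
r, g, b = image[1][0]  # row 1, column 0 is pure blue

# Example: 640x480 colour video at 30 frames per second.
bytes_per_second = raw_video_bandwidth(640, 480, 30)
print(bytes_per_second / 1e6, "MB/s")
```

For these example numbers the raw stream is roughly 27.6 MB/s, which is why images are often compressed or downsampled before being sent between computers.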

Depth Cameras

- Like a Microsoft Kinect

- In addition to `{r,g,b}` also has distance, so we get `{r,g,b,d}`

- Useful for getting range measurements of an object detected through camera
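One way to turn a detected pixel plus its depth into a range measurement is to back-project it with an ideal pinhole camera model. This is a sketch under stated assumptions: the depth value is taken as distance along the optical axis in metres, lens distortion is ignored, and the intrinsics `fx`, `fy`, `cx`, `cy` are example values rather than a real calibration.

```python
import math


def pixel_to_point(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth (metres) to a 3D point in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


def range_to_point(point):
    """Straight-line distance from the camera origin to a 3D point."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z)


# Example: an object detected at pixel (400, 260) with a depth reading of 1.5 m.
# The intrinsics below are assumed values for a 640x480 image.
point = pixel_to_point(400, 260, 1.5, fx=525.0, fy=525.0, cx=320.0, cy=240.0)
distance = range_to_point(point)
```

In practice the intrinsics come from the camera's calibration data rather than being hard-coded.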

Computation

- Distributed environment, typically:

- roscore running onboard, on the Raspberry Pi

- Some additional ROS nodes running on Raspberry Pi

- More ROS nodes running on a laptop or other computer

- Workload is truly distributed across all of those

- Eventually we may need a much beefier onboard computer running all three

- This would make the robot much more independent

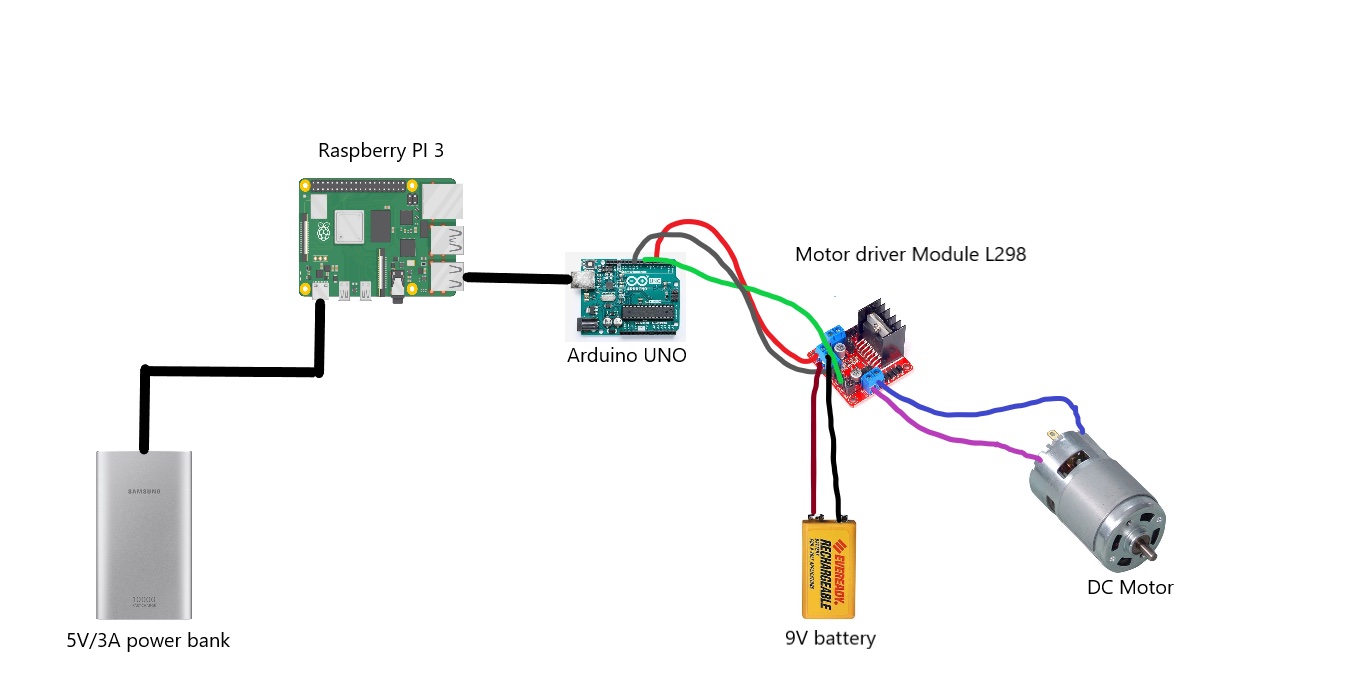

Our actual Robots

- Differential Drive - 2 powered wheels and one (or two) casters

- Arduino or Arduino-like computer to control the motor.

- Motor Controller and IMU directly connected to Arduino

- Raspberry Pi which runs Ubuntu and ROS, connected to Arduino via a USB cable

- Lidar connected to Raspberry Pi via USB cable

Diagram