Introduction

- How can the robot follow lines on the ground?

- Acquire images

- Filter the images

- Steer the robot

Acquiring Images

- Message:

sensor_msgs/Image

- Run Gazebo to simulate a robot with a camera

- Since the TB3 Waffle has a camera, we will use those models, and then run gazebo

# Set model to WAFFLE!!!

$ export TURTLEBOT3_MODEL="waffle"

$ export TB3_MODEL="waffle"

$ roslaunch turtlebot3_gazebo turtlebot3_world.launch

$ rostopic list | grep /camera

- Note all the camera related topics being published

- The image itself can be found under

/camera/rgb/image_raw

../compressed is good for sending the images over wifi../theora applies even more compression

Look at image

- Lets visualize the image

- Choose from each of the topics relating to the camera

- Move the robot in Gazebo and watch the picture change

$ rqt_image_view

Run the simplest program

$ rosrun samples follower.py

$ rosnode samples follower

--------------------------------------------------------------------------------

Node [/follower]

Publications:

* /rosout [rosgraph_msgs/Log]

Subscriptions:

* /camera/rgb/image_raw [sensor_msgs/Image]

* /clock [rosgraph_msgs/Clock]

Services:

* /follower/get_loggers

* /follower/set_logger_level

contacting node http://10.0.0.95:39103/ ...

Pid: 17179

Connections:

* topic: /rosout

* to: /rosout

* direction: outbound

* transport: TCPROS

* topic: /clock

* to: /gazebo (http://10.0.0.95:42857/)

* direction: inbound

* transport: TCPROS

* topic: /camera/rgb/image_raw

* to: /gazebo (http://10.0.0.95:42857/)

* direction: inbound

* transport: TCPROS

- First section is information determined from roscore about what /follower is doing

- Second section is information directly from the /follower node about it’s connections

- We see that it is publishing to /rosout

- And is subscribed to

/clock (from gazebo)

- And

/camera/rgb/image_raw (from gazebo too)

- Lets find out the frame rate (simulated by gazebo)

rostopic hz /camera/rgb/image_raw

subscribed to [/camera/rgb/image_raw]

WARNING: may be using simulated time

average rate: 10.204

min: 0.019s max: 0.131s std dev: 0.03037s window: 10

- So, we are receiving about 10 images per second

- Which is why my computer is so slow :)

Race Course

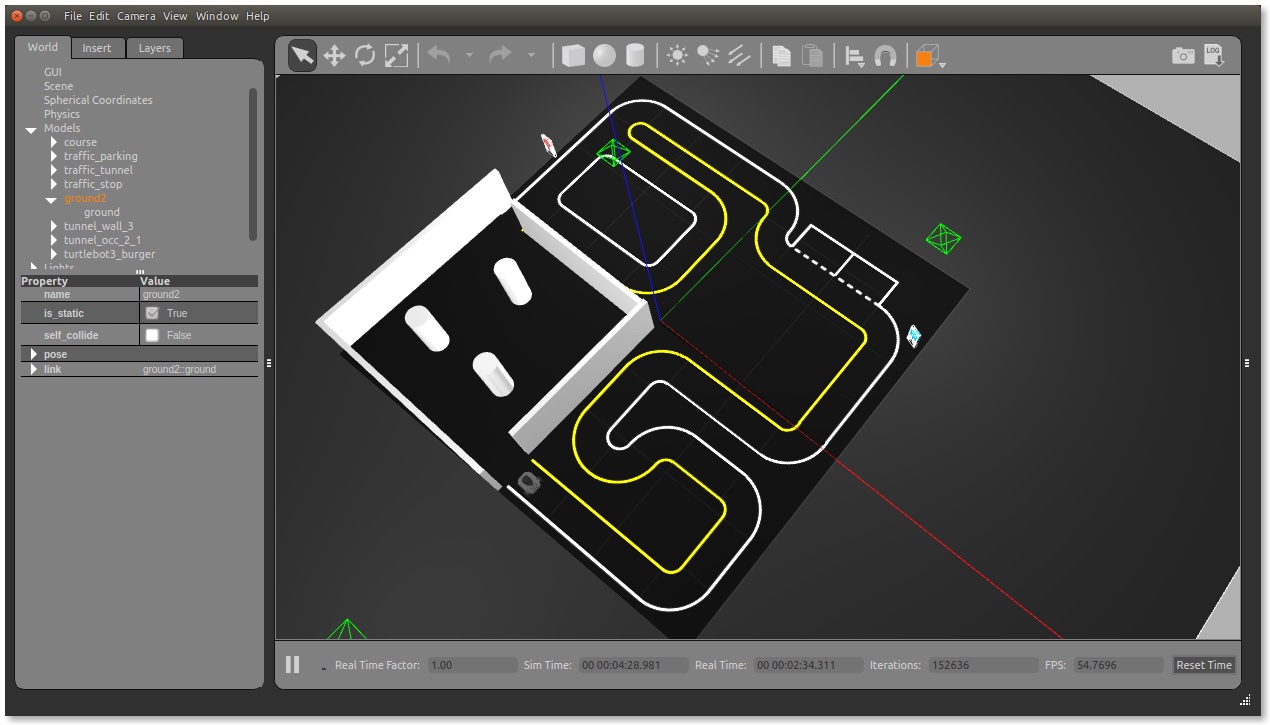

NB: We diverge from the book a little. Note different roslaunch

- Kill everything from before

- Load a new world into gazebo

$ roslaunch turtlebot3_gazebo turtlebot3_autorace.launch

- You will see in gazebo, a TB3 Waffle on a little racetrack

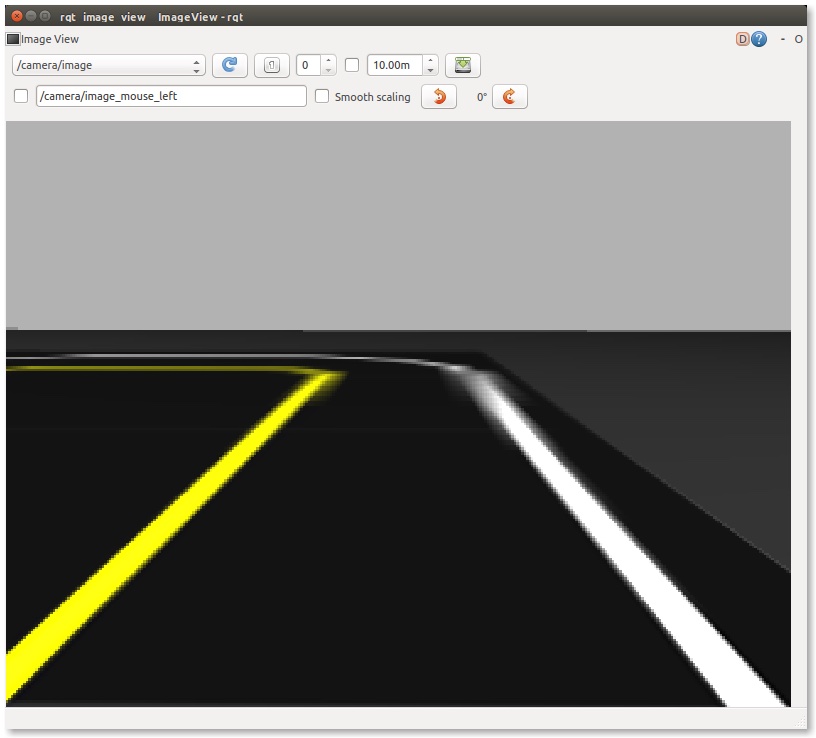

- Here’s the track, and the view from the camera:

Introducing OpenCV

Detecting the yellow line

- Image and Pattern Recognition is arbitrarily complex

- Here we will take a simplistic approach

- An interesting relevant link: Tracking Colored Objects

- Note that we customized the values to recognize yellow as

HSV [40,0,0]:[120,255,255]

- These values are very rough and could be improved

$ rosrun samples follower_color_filter.py

- Will display three windows: the original image, the mask and the original as masked

- We will really only need the masked image

Now, use yellow line to pick a direction

- First we take a 20 pixel slice near the bottom of the filtered image, which contains ony the yellow line

- We process the image further to locate the “centroid”

- And we paint a red circle there to show where that is

- Notice that while the image is still, its actually being regenerated 10 times per second, once for each frame. Move the robot manually in Gazebo to notice this.

$ rosrun samples follower_line_finder.py

Actually following the line

- We will use the location of the centroid

- We offset it because the yellow line is not in the middle of the road

- We move the robot forward and give it a little turn

- Based on how far from the middle the centroid is

- Notice this is a PID controller!

$ rosrun samples follower_p.py