Logistics

- Oct 29: Team Project Proposals

- Oct 31: OS Quiz

- Oct 31: Line Follower Programming Assignment

Computer Vision

Seeing ==? Vision

- We focus here on cameras

- Ignore other kinds of “seeing” such as touch sensors or LIDAR.

- A lot more than taking pictures

- Can be used for:

- Mapping

- Localization

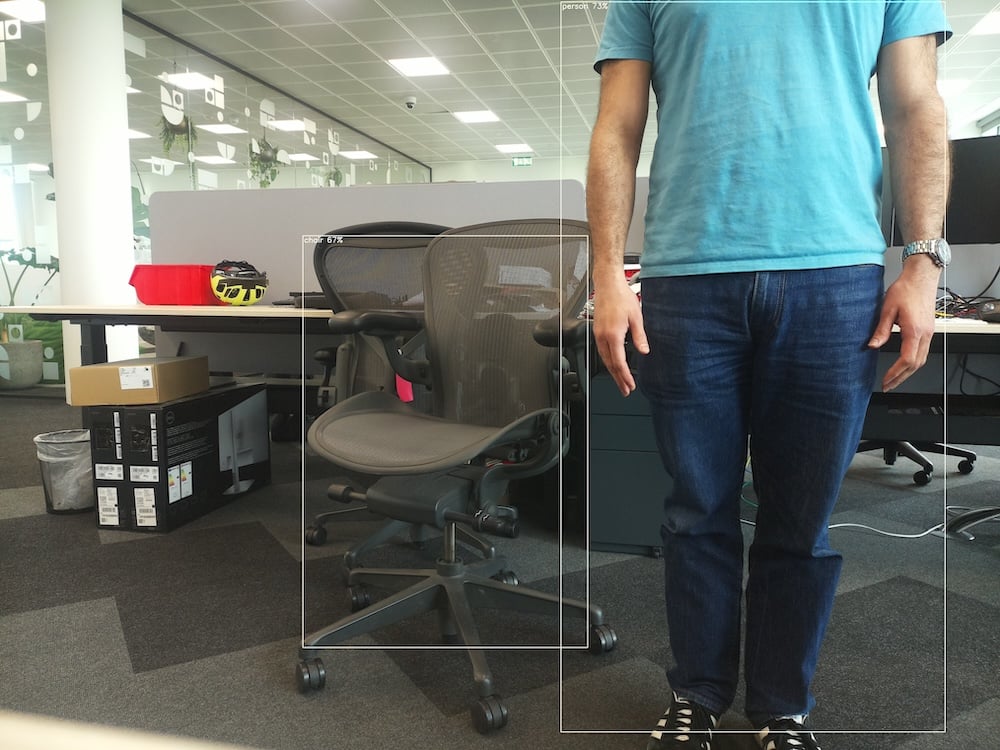

- Object recognition

- Trajectory estimation

- Driver distraction detection

- Redundancy

Camera Types: Regular (webcam-like)

- RGB images

- Video is basically a stream of images

- Frame rate: how many images are sent per second

- The higher the frame rate, the more bandwidth and CPU are used

- Video is usually treated exactly that way

- Each image (frame) is analyzed individually
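To put a number on "the more bandwidth", here is a minimal sketch of the raw (uncompressed) data rate of a video stream. The 640x480, 3-channel, 30 fps figures are illustrative assumptions, not the specs of any particular camera.

```python
def raw_video_bandwidth(width, height, channels, bytes_per_channel, fps):
    """Bytes per second needed to send uncompressed frames."""
    bytes_per_frame = width * height * channels * bytes_per_channel
    return bytes_per_frame * fps


# Example: 640x480 bgr8 (3 channels, 1 byte each) at 30 frames per second.
bps = raw_video_bandwidth(640, 480, 3, 1, 30)
print(bps)            # 27648000 bytes per second
print(bps * 8 / 1e6)  # 221.184 megabits per second
```

Halving the frame rate halves the data rate, and so does halving the number of pixels. At roughly 221 Mbit/s uncompressed, a stream like this can easily strain a Wi-Fi link, which is one reason frame rate, resolution and compression all show up as design considerations.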

Camera features and characteristics

- Field of view (wide angle, narrow angle)

- Mount position (pose)

- Stationary or movable

- Means of connection to the robot

Types of cameras

- Basic RaspiCam (webcam-like)

- Mobile phone camera

- Depth cameras

- Infrared cameras

- “AI Cameras”

Considerations

- Resolution of image

- Power requirements

- Fixed direction or sometimes a swivel

- ROS needs to know how the camera's position and direction relate to the overall robot base

- Another job for TFs

- What would happen if this information is incorrect?

- Where do the images have to be “sent” to?

- Compression factors

- Where is the image processed?

- Wi-Fi and other bandwidth limits

Connections

- USB, direct to Raspberry Pi

- The image has to be viewed through a Unix utility

- Which is not always easy

- Bandwidth and speed of connection

Role of TFs

- What is the likely TF that would be needed?

- What would that tell us?
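One way to see what a camera TF tells us: it is a rigid transform (rotation plus translation) from the camera frame to the robot base frame, so any point the camera locates can be re-expressed relative to the base. The sketch below does that by hand with a 4x4 homogeneous matrix. The mount offsets are hypothetical, it only models a yaw rotation, and it assumes the camera frame uses the same x-forward, z-up axes as the base (ROS camera optical frames are z-forward instead, and in practice tf2 computes and chains these transforms for you).

```python
import math

import numpy as np


def make_transform(x, y, z, yaw):
    """4x4 homogeneous transform: rotate by yaw (radians) about z, then translate by (x, y, z)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return np.array([
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ])


def camera_to_base(base_T_camera, point_in_camera):
    """Express a 3D point measured in the camera frame in the base frame."""
    p = np.append(np.asarray(point_in_camera, dtype=float), 1.0)  # homogeneous coordinates
    return (base_T_camera @ p)[:3]


# Hypothetical mount: camera 0.1 m ahead of and 0.2 m above the base, facing forward.
base_T_camera = make_transform(0.1, 0.0, 0.2, 0.0)

# An object 1 m straight ahead of the camera is 1.1 m ahead of and 0.2 m above the base.
print(camera_to_base(base_T_camera, [1.0, 0.0, 0.0]))
```

If the TF were wrong, say it claimed a 0.3 m forward offset when the camera is really at 0.1 m, every detected object would be reported 0.2 m too far ahead, which is one concrete answer to "what would happen if this information is incorrect?"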

Computer Vision (CV)

- Recognizing: faces, locations, fiducials, gestures

- Image processing: filtering colors, isolating shapes etc.

- Machine Learning (ML): Statistical analysis of tagged images

Code

Note: Burger TB3 in Gazebo does not have a camera! Must use Waffle

roslaunch samples linemission.launch model:=waffle

- In samples: cvexample.py

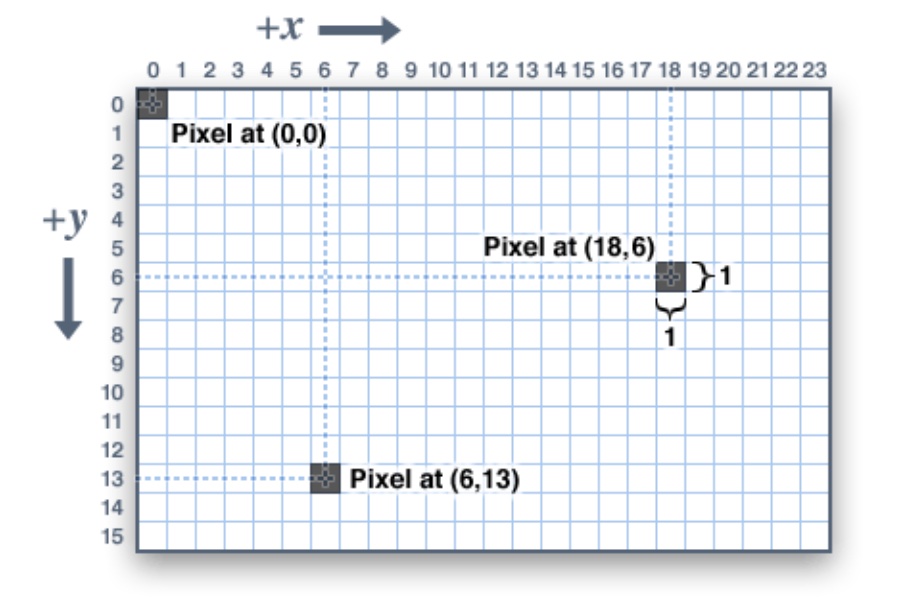

Image Properties

- Images are stored in arrays

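- Top left pixel is (0, 0)

- Pixels are indexed as image[row][column], so y comes before x

- Bottom right pixel is (m-1, n-1) for an image with m rows and n columns

- Each element of this array is another array, with the values for that pixel

- What those values mean depends on the image encoding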
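A quick NumPy illustration of these properties, using a synthetic all-black image. NumPy is assumed here because OpenCV's Python bindings represent images as NumPy arrays; the 640x480 size is just an example.

```python
import numpy as np

# A synthetic 480-row x 640-column, 3-channel image (all black), the shape a
# 640x480 camera frame has once converted to a NumPy array.
image = np.zeros((480, 640, 3), dtype=np.uint8)

rows, cols, channels = image.shape        # height first, then width, then channels
image[0, 0] = (255, 0, 0)                 # top-left pixel
image[rows - 1, cols - 1] = (0, 0, 255)   # bottom-right pixel is (m-1, n-1), not (m, n)

print(image.shape)    # (480, 640, 3)
print(image[-1, -1])  # [  0   0 255]  (negative indices count from the end)
```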

Image Encodings

- Usually each component is an 8-bit integer from 0 to 255

- Zero means none of that component; 255 means the maximum of that component

- The first three components determine the color

- There can be more components, e.g. distance, or transparency, or others.

- Generally you can convert between encodings, though some conversions lose information (e.g. color to monochrome)
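As an example of converting between encodings, and of why some conversions lose information, here is a minimal RGB-to-monochrome sketch in NumPy. It uses the weights 0.299, 0.587 and 0.114, which are the ones OpenCV documents for its color-to-gray conversion; OpenCV's own result may differ by one level because of rounding, and the function name is only illustrative.

```python
import numpy as np


def rgb_to_gray(rgb):
    """Convert an HxWx3 uint8 RGB image to an HxW uint8 grayscale image."""
    weights = np.array([0.299, 0.587, 0.114])  # R, G, B luma weights
    gray = rgb.astype(np.float64) @ weights    # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


# One row of four pixels: red, green, blue, white.
pixels = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255], [255, 255, 255]]], dtype=np.uint8)
gray = rgb_to_gray(pixels)
print(pixels.shape, "->", gray.shape)
print(gray)
```

Three channels collapse into one, so different colors can map to similar gray levels and the original colors cannot be recovered from the result.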

Encoding for color: RGB

- RGB - Red, Green, Blue

- Like mixing colored lights: 100% of red + green + blue gives white, and 0% of all three gives black

Encoding for color: HSV

- HSV - Hue, Saturation, Value

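- A little more subtle; it takes some practice to train your eye

- Hue is the color itself: all colors are arranged around a color wheel, usually measured in degrees from 0 to 360 (OpenCV's 8-bit HSV images store hue as 0-179, i.e. degrees divided by 2)

- Saturation is how “pure” or “saturated” that color is

- Value is how “bright” the color is

- Sometimes it's easier to think in terms of HSV, e.g. when picking out one color under changing lighting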
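Python's standard colorsys module can show how RGB colors land in HSV. This sketch also rescales the result to the 8-bit ranges OpenCV uses for its HSV images (hue 0-179, saturation and value 0-255); the function name is hypothetical and its rounding may not match OpenCV's exactly.

```python
import colorsys


def rgb8_to_hsv8_opencv_scale(r, g, b):
    """Convert one 8-bit RGB color to HSV on OpenCV's 8-bit scale.

    colorsys works on floats in [0, 1]; hue is rescaled to degrees / 2
    (0-179) so it fits in a byte, and S and V are rescaled to 0-255.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    hue = round(h * 360 / 2) % 180  # halved degrees; 360 degrees wraps back to 0
    return hue, round(s * 255), round(v * 255)


for name, rgb in [("red", (255, 0, 0)), ("dark red", (128, 0, 0)),
                  ("pink", (255, 128, 128)), ("green", (0, 255, 0)),
                  ("blue", (0, 0, 255))]:
    print(name, rgb8_to_hsv8_opencv_scale(*rgb))
```

Red, dark red and pink share the same hue and differ only in value or saturation, which is why thresholding on hue is often simpler than thresholding on RGB when lighting varies.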

# Simple manipulations

tl_pixel = image[0][0] # top left pixel (row 0, column 0)

first_channel = image[0][0][0] # channel 0 of top left pixel: 'R' for rgb8, but 'B' for bgr8

cropped_img = image[120:240, 0:320] # rows 120-239, columns 0-319: the bottom half, assuming a 320x240 image
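The same manipulations on a synthetic NumPy image, so they can be run without a camera. The 240x320 size is an assumption chosen so the crop really is the bottom half, and the bgr8 channel order matches what CvBridge().imgmsg_to_cv2(msg, desired_encoding='bgr8') returns.

```python
import numpy as np

# Synthetic 240-row x 320-column bgr8 image.
image = np.zeros((240, 320, 3), dtype=np.uint8)
image[0, 0] = (255, 0, 0)  # in bgr8 this top-left pixel is pure blue

tl_pixel = image[0, 0]          # same element as image[0][0]
first_channel = image[0, 0, 0]  # channel 0: blue for bgr8, red for rgb8

height = image.shape[0]
bottom_half = image[height // 2:, :]  # rows height/2 to the end, all columns
bottom_copy = bottom_half.copy()      # slices are views; copy before editing if the original must stay intact

print(tl_pixel, first_channel, bottom_half.shape)
```

Computing the crop from image.shape instead of hard-coding 120:240 keeps it correct if the camera resolution changes.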

Image processing with OpenCV

- OpenCV is the key library used for image processing in ROS

- It is completely independent of ROS, but good bridging functions exist

- In Python it is imported as cv2; the module keeps that name even though current releases are OpenCV 4.x

Basics to understand

- Understand representations of images as 2+ dimensional arrays

- Understand that a given image “array” encodes image information in a particular way

- There are different encodings, which change what is stored in the innermost array

- Monochrome, Depth, RGB, HSV, and more

- Different OpenCV functions expect and generate images of specific types

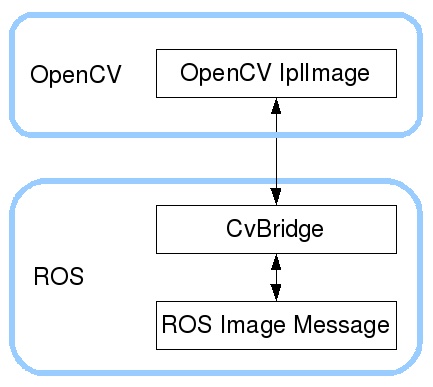
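To make "functions expect and generate images of specific types" concrete, here is a NumPy sketch of a color threshold: it takes a 3-channel image and produces a single-channel 0/255 mask. It mirrors what cv2.inRange is documented to do (inclusive bounds, 255 inside the range); the function name and the rough "yellow" bounds are assumptions for illustration.

```python
import numpy as np


def in_range_mask(image, lower, upper):
    """Return an HxW uint8 mask: 255 where every channel is within [lower, upper], else 0."""
    lower = np.asarray(lower, dtype=image.dtype)
    upper = np.asarray(upper, dtype=image.dtype)
    inside = np.all((image >= lower) & (image <= upper), axis=-1)  # HxW booleans
    return np.where(inside, 255, 0).astype(np.uint8)


# A 2x2 HSV image on OpenCV's 8-bit scale (hue 0-179).
hsv = np.array([[[30, 200, 200], [90, 200, 200]],
                [[30, 40, 200], [25, 255, 255]]], dtype=np.uint8)
mask = in_range_mask(hsv, (20, 100, 100), (35, 255, 255))  # roughly "yellow"
print(hsv.shape, hsv.dtype, "->", mask.shape, mask.dtype)
print(mask)
```

Note the type change: an HxWx3 uint8 image goes in and an HxW uint8 mask comes out, so the next step in a pipeline has to expect a single-channel image.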

Connecting OpenCV to ROS …

- CvBridge (from the cv_bridge package) provides methods that convert ROS Image messages to OpenCV images (NumPy arrays)

- And vice versa

- e.g.

CvBridge().imgmsg_to_cv2(msg, desired_encoding='bgr8')
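A minimal sketch of where CvBridge sits in a ROS 1 node (rospy is assumed because these notes use roslaunch). The node name, the output topic, and the /camera/rgb/image_raw input topic are assumptions; check the real camera topic with rostopic list. The ROS imports are inside main() so the image-processing function can be used without ROS installed.

```python
import numpy as np


def process_frame(bgr):
    """The OpenCV/NumPy side: plain arrays in, plain arrays out, no ROS types."""
    height = bgr.shape[0]
    return np.ascontiguousarray(bgr[height // 2:, :])  # keep the bottom half


def main():
    # ROS 1 imports live here so process_frame can be used (and tested) without ROS.
    import rospy
    from cv_bridge import CvBridge
    from sensor_msgs.msg import Image

    bridge = CvBridge()
    rospy.init_node("cv_bridge_sketch")  # hypothetical node name
    pub = rospy.Publisher("/cv_bridge_sketch/bottom_half", Image, queue_size=1)  # hypothetical topic

    def on_image(msg):
        frame = bridge.imgmsg_to_cv2(msg, desired_encoding="bgr8")  # ROS message -> NumPy array
        result = process_frame(frame)
        out_msg = bridge.cv2_to_imgmsg(result, encoding="bgr8")     # NumPy array -> ROS message
        out_msg.header = msg.header  # keep the original timestamp and frame_id
        pub.publish(out_msg)

    # Assumed camera topic; confirm it with `rostopic list`.
    rospy.Subscriber("/camera/rgb/image_raw", Image, on_image, queue_size=1)
    rospy.spin()
```

Calling main() at the bottom of a node script would start the node; keeping process_frame free of ROS types makes it easy to test on saved images.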

How to think about algorithms

- They are a series of distinct steps (e.g. a pipeline)

- Often: given an image, transform it into a different image

- The order really matters

- Sometimes: given an image, analyze it and generate data (a number, an array, a list, etc.)
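As a hypothetical example of such a pipeline (loosely in the spirit of a line follower, but not the assignment's required approach), the sketch below chains two transform steps and one analysis step. The white-line assumption and the brightness threshold of 200 are illustrative.

```python
import numpy as np


def crop_bottom(image):
    """Transform step: image -> bottom half of the image (the area just ahead of the robot)."""
    return image[image.shape[0] // 2:, :]


def threshold_bright(image, level=200):
    """Transform step: HxWx3 image -> HxW uint8 mask (255 where all channels >= level)."""
    return np.where(np.all(image >= level, axis=-1), 255, 0).astype(np.uint8)


def centroid_column(mask):
    """Analysis step: mask -> a single number (mean column of set pixels), or None."""
    cols = np.nonzero(mask)[1]
    return float(cols.mean()) if cols.size else None


def line_offset(image):
    """Pipeline: crop -> threshold -> analyze. Pixels right (+) or left (-) of center."""
    mask = threshold_bright(crop_bottom(image))
    cx = centroid_column(mask)
    return None if cx is None else cx - (mask.shape[1] - 1) / 2


# Synthetic 240x320 frame with a white stripe in columns 200-209 of the bottom half.
frame = np.zeros((240, 320, 3), dtype=np.uint8)
frame[120:, 200:210] = 255
print(line_offset(frame))
```

The order matters: cropping first means bright things far ahead, in the top half of the frame, cannot pull the result, and thresholding before the analysis step turns "where is the line?" into a simple average of column indices.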

Thank you. Questions?